Microsoft Graph Connector crawling using Azure Functions

19 Jul 2020

Introduction

It has been a while since Microsoft Graph connectors went into public preview. They are a great way to index third-party data so that it appears in Microsoft Search results. Microsoft provides a few built-in connectors for sources such as Azure DevOps, ServiceNow and file shares. To index data from any other custom service, you can build a connector in Microsoft Graph that creates a connection and pushes items to the Microsoft Search index.

Setting up a connector comes down to a few Graph API calls: creating a connection, registering a schema that describes the type of external data, and finally indexing the data. Detailed information regarding these API calls can be found here.
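As a rough illustration of the first of these calls, the sketch below validates a connection ID and builds the JSON body for creating a connection. It assumes the current v1.0 endpoint (POST https://graph.microsoft.com/v1.0/external/connections) and the ID rules documented for the externalConnection resource (3 to 32 alphanumeric characters, not starting with "Microsoft"). The beta API used by this article's sample may differ, and the ConnectionSetup name and example values are hypothetical.

```csharp
using System;
using System.Linq;
using System.Text.Json;

public static class ConnectionSetup
{
    // Connection ID rules as documented for externalConnection: 3 to 32 characters,
    // ASCII letters and digits only, not starting with "Microsoft" (checked
    // case-insensitively here to be safe). The documented reserved IDs, such as
    // "SharePoint", are not checked in this sketch.
    public static bool IsValidConnectionId(string id)
    {
        if (string.IsNullOrEmpty(id) || id.Length < 3 || id.Length > 32)
            return false;

        bool asciiAlphanumeric = id.All(c =>
            (c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z') || (c >= '0' && c <= '9'));

        return asciiAlphanumeric
            && !id.StartsWith("Microsoft", StringComparison.OrdinalIgnoreCase);
    }

    // JSON body for the "create connection" call (POST /external/connections in v1.0).
    public static string BuildCreateConnectionBody(string id, string name, string description)
    {
        if (!IsValidConnectionId(id))
            throw new ArgumentException($"Invalid connection ID: {id}", nameof(id));

        // Anonymous type members serialize as "id", "name" and "description".
        return JsonSerializer.Serialize(new { id, name, description });
    }
}
```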

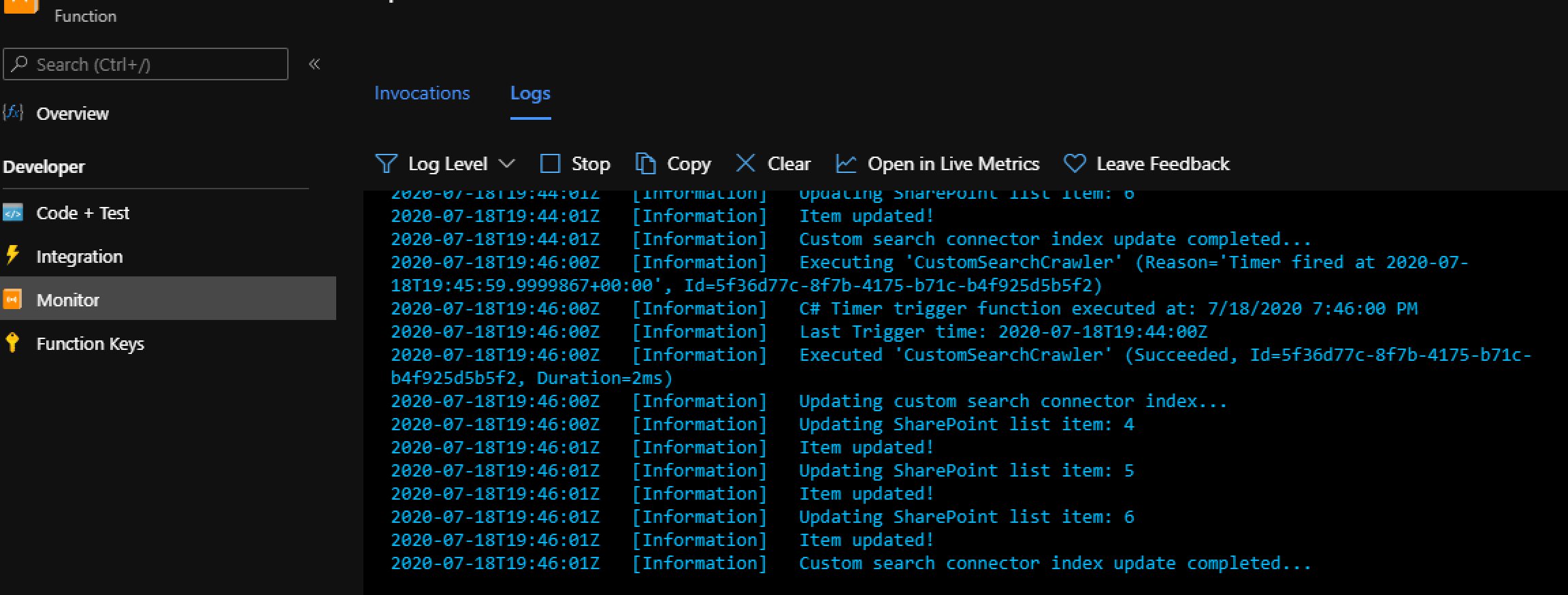

The steps above cover the one-time setup and initial indexing of external data in the Microsoft Search index. Keeping that data up to date, however, requires a crawling mechanism of your own, because at the time of writing only Microsoft's built-in connectors provide scheduled crawling out of the box. This article describes one approach for scheduled crawling of external data using a timer-triggered Azure function.

Azure Function Crawler

To keep the connector's external data up to date, the search indexing API calls need to be triggered on a regular schedule, which a timer-triggered Azure function can do. Depending on the amount of data and the business requirements, either a full crawl (re-indexing every item on each run) or an incremental crawl (pushing only the items changed since the previous run) can be set up.
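A sketch of how a run could choose between the two, assuming the function knows when it last ran (for a timer trigger, TimerInfo.ScheduleStatus.Last can provide this when schedule monitoring is enabled, although ScheduleStatus may be null). The CrawlPlan type, the overlap margin and the maxIncrementalGap fallback are illustrative choices, not part of the article's sample.

```csharp
using System;

// Hypothetical helper that decides between a full and an incremental crawl.
public sealed class CrawlPlan
{
    public bool IsFullCrawl { get; }

    // Re-index items modified at or after this instant; null means "everything".
    public DateTimeOffset? ModifiedSince { get; }

    private CrawlPlan(bool isFullCrawl, DateTimeOffset? modifiedSince)
    {
        IsFullCrawl = isFullCrawl;
        ModifiedSince = modifiedSince;
    }

    public static CrawlPlan Decide(
        DateTimeOffset? lastRun,      // previous trigger time, if known
        DateTimeOffset now,
        TimeSpan overlap,             // margin for clock skew between the function and the source
        TimeSpan maxIncrementalGap)   // beyond this gap (e.g. the function was disabled), re-crawl everything
    {
        if (lastRun == null || now - lastRun.Value > maxIncrementalGap)
            return new CrawlPlan(true, null);

        // Re-reading the overlap window is harmless because pushing an item is an upsert (PUT).
        return new CrawlPlan(false, lastRun.Value - overlap);
    }
}
```

The overlap trades a few duplicate writes for never silently skipping a change made just before the previous run finished.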

The approach below shows how to implement crawling of external data using a .NET (C#) function. In this sample, the external data indexed in Microsoft Search comes from a SharePoint list, but the approach can be adapted to fetch data from any other custom service.
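One way to keep the crawling logic independent of SharePoint is to hide the data source behind a small interface with the two operations the GraphHelper class provides later (read everything, read what changed since a given time). This is a hypothetical design sketch: SourceItem, IExternalSource and InMemorySource are illustrative names, and a SharePoint-backed implementation would wrap the list item calls.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical source-neutral shape of one record to index.
public sealed class SourceItem
{
    public string Id { get; set; }
    public DateTimeOffset Modified { get; set; }
    public IDictionary<string, object> Properties { get; set; }
}

// Hypothetical abstraction: the crawler only needs "all items" and "items changed since".
public interface IExternalSource
{
    Task<IReadOnlyList<SourceItem>> GetAllAsync();
    Task<IReadOnlyList<SourceItem>> GetModifiedSinceAsync(DateTimeOffset since);
}

// In-memory stand-in, e.g. for exercising the crawler without SharePoint.
public sealed class InMemorySource : IExternalSource
{
    private readonly List<SourceItem> _items;

    public InMemorySource(IEnumerable<SourceItem> items) => _items = items.ToList();

    public Task<IReadOnlyList<SourceItem>> GetAllAsync() =>
        Task.FromResult<IReadOnlyList<SourceItem>>(_items.ToList());

    // Items whose Modified time is at or after the given instant.
    public Task<IReadOnlyList<SourceItem>> GetModifiedSinceAsync(DateTimeOffset since) =>
        Task.FromResult<IReadOnlyList<SourceItem>>(_items.Where(i => i.Modified >= since).ToList());
}
```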

Prerequisites

Set up the custom connector

- Register an Azure AD app with the `ExternalItem.ReadWrite.All` application permission on Microsoft Graph. Also create a client secret for the app and note it down, along with the app ID and tenant ID.
- Create a connection.
- Register a schema for the type of external data (for example, the Appliances data in the GitHub sample mentioned below).
- Create a vertical.
- Create a result type.

Refer to the sample https://github.com/microsoftgraph/msgraph-search-connector-sample for setting up the connector and registering the schema.
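For the schema step, a sketch of a request body whose properties mirror the SharePoint list columns used in this article. It assumes the v1.0 schema format (a baseType of microsoft.graph.externalItem plus a properties array); the property names and flags are illustrative and must match the schema you actually register. Only String and StringCollection properties can be searchable, so the numeric ones are only queryable and retrievable. The HTTP method for registering a schema has changed between API versions, so check the current schema reference before sending it.

```csharp
using System.Collections.Generic;
using System.Text.Json;

public static class SchemaSketch
{
    // One schema property; names and flags are illustrative.
    private static Dictionary<string, object> Property(
        string name, string type, bool searchable, bool queryable, bool retrievable) =>
        new Dictionary<string, object>
        {
            ["name"] = name,
            ["type"] = type,
            ["isSearchable"] = searchable,
            ["isQueryable"] = queryable,
            ["isRetrievable"] = retrievable,
        };

    public static string BuildSchemaBody()
    {
        var body = new Dictionary<string, object>
        {
            ["baseType"] = "microsoft.graph.externalItem",
            ["properties"] = new List<object>
            {
                Property("title", "String", true, true, true),
                Property("description", "String", true, false, true),
                Property("appliances", "String", true, true, true),
                // Numeric types cannot be searchable.
                Property("inventory", "Int64", false, true, true),
                Property("price", "Double", false, true, true),
            },
        };

        // object-typed values are serialized using their runtime types.
        return JsonSerializer.Serialize(body);
    }
}
```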

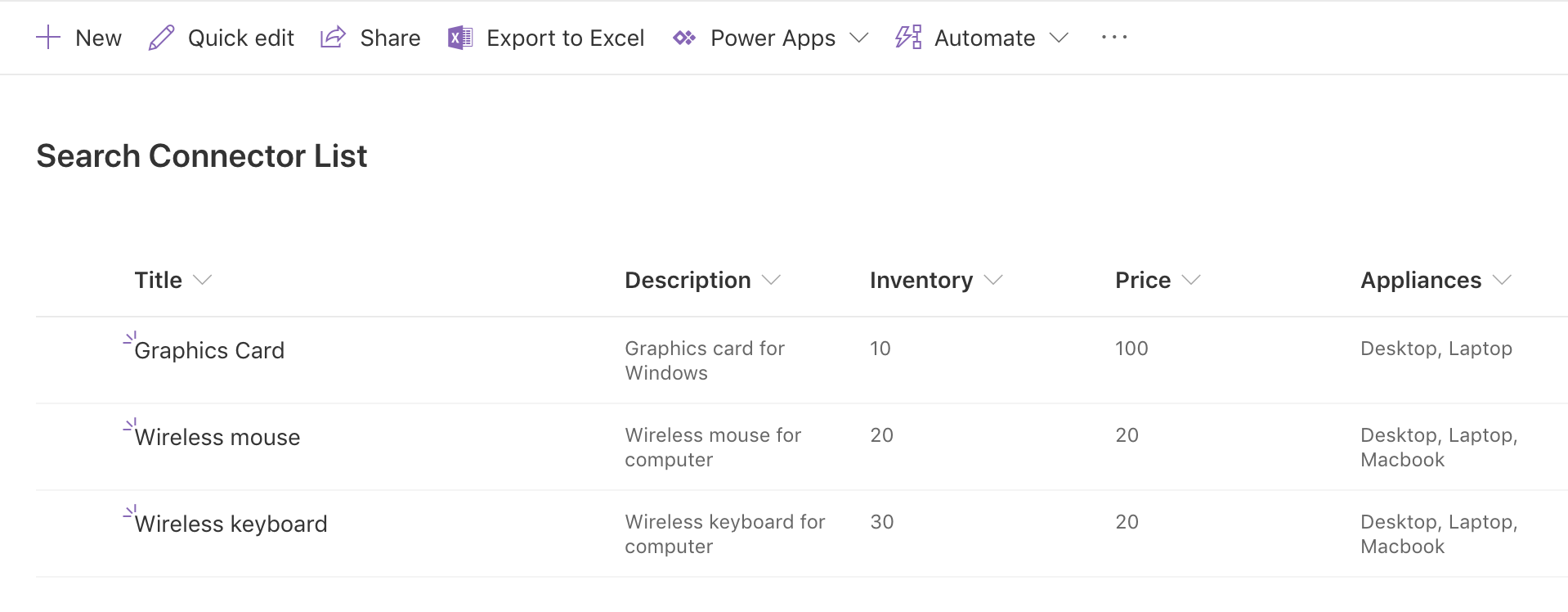

Create a SharePoint List

Create a SharePoint list with the below columns (in accordance with the schema registered) that will serve as the external data source.

- Title of type Single line of text
- Description of type Single line of text
- Appliances of type Single line of text
- Inventory of type Number
- Price of type Number
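When the list is read through Microsoft Graph (GET /sites/{site-id}/lists/{list-id}/items?$expand=fields), each item's column values arrive in a fields object keyed by internal column name. A sketch of mapping one item to a typed row, assuming the internal names equal the display names above (a column renamed after creation keeps its original internal name), that Number columns arrive as JSON numbers, and that empty columns may be absent. ApplianceRow is a hypothetical type.

```csharp
using System;
using System.Text.Json;

// Hypothetical typed view of one SharePoint list item.
public sealed class ApplianceRow
{
    public string Id { get; set; }
    public string Title { get; set; }
    public string Description { get; set; }
    public string Appliances { get; set; }
    public long Inventory { get; set; }
    public double Price { get; set; }

    // item is one element of the "value" array returned by the list items endpoint.
    public static ApplianceRow FromListItem(JsonElement item)
    {
        JsonElement fields = item.GetProperty("fields");
        return new ApplianceRow
        {
            Id = item.GetProperty("id").GetString(),
            Title = GetString(fields, "Title"),
            Description = GetString(fields, "Description"),
            Appliances = GetString(fields, "Appliances"),
            // Number columns are read as doubles; Inventory is assumed to hold whole numbers.
            Inventory = (long)Math.Round(GetNumber(fields, "Inventory")),
            Price = GetNumber(fields, "Price"),
        };
    }

    // Missing or non-string values fall back to an empty string.
    private static string GetString(JsonElement fields, string name) =>
        fields.TryGetProperty(name, out JsonElement value) && value.ValueKind == JsonValueKind.String
            ? value.GetString()
            : string.Empty;

    // Missing or non-numeric values fall back to zero.
    private static double GetNumber(JsonElement fields, string name) =>
        fields.TryGetProperty(name, out JsonElement value) && value.ValueKind == JsonValueKind.Number
            ? value.GetDouble()
            : 0d;
}
```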

Azure Function

- Create a timer-triggered Azure function using VS Code, as specified at this link.

- Add the NuGet packages `Microsoft.Graph.Beta` and `Microsoft.Identity.Client` using the commands below:

```shell
dotnet add package Microsoft.Graph.Beta --version 0.19.0-preview
dotnet add package Microsoft.Identity.Client --version 4.16.1
```

- For local development, update the `local.settings.json` file with the configuration below, filling in the required IDs, secret and CRON schedule. Once the function is deployed to an Azure Function App, add the same values as application settings.

```json
{
  "IsEncrypted": false,
  "Values": {
    "AzureWebJobsStorage": "<STORAGE_ACCOUNT_CONNECTION_STRING>",
    "FUNCTIONS_WORKER_RUNTIME": "dotnet",
    "schedule": "<CRON_SCHEDULE>",
    "appId": "<APP_ID>",
    "tenantId": "<TENANT_ID>",
    "secret": "<APP_SECRET>",
    "connectionId": "<CONNECTION_ID>",
    "siteId": "<SITE_ID>",
    "listId": "<LIST_ID>"
  }
}
```

- Create an `MSALAuthenticationProvider.cs` class that the `GraphServiceClient` object will use for authentication.
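As a rough sketch of what such a provider does, the code below reads the appId, tenantId and secret settings from the environment (Azure Functions exposes local.settings.json values locally, and application settings in Azure, as environment variables) and builds the OAuth 2.0 client credentials request to the Microsoft identity platform token endpoint with the https://graph.microsoft.com/.default scope. In the article's setup MSAL performs this exchange (a confidential client application calling AcquireTokenForClient, which also caches the token) and the provider attaches the token as a bearer Authorization header; the raw request is shown only to make the flow visible, and TokenRequests is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Net.Http;

public static class TokenRequests
{
    // Reads a required app setting from the environment, failing loudly if it is missing.
    public static string GetSetting(string name) =>
        Environment.GetEnvironmentVariable(name)
        ?? throw new InvalidOperationException($"Missing app setting '{name}'.");

    // Builds (but does not send) an OAuth 2.0 client credentials token request.
    // MSAL's AcquireTokenForClient performs the equivalent exchange.
    public static HttpRequestMessage BuildClientCredentialsRequest(
        string tenantId, string appId, string secret)
    {
        var endpoint = new Uri(
            $"https://login.microsoftonline.com/{Uri.EscapeDataString(tenantId)}/oauth2/v2.0/token");

        return new HttpRequestMessage(HttpMethod.Post, endpoint)
        {
            Content = new FormUrlEncodedContent(new Dictionary<string, string>
            {
                ["grant_type"] = "client_credentials",
                ["client_id"] = appId,
                ["client_secret"] = secret,
                // ".default" requests the application permissions already granted
                // to the app, such as ExternalItem.ReadWrite.All.
                ["scope"] = "https://graph.microsoft.com/.default",
            }),
        };
    }
}
```

The schedule setting is not read this way: the timer trigger can bind to it directly with the %schedule% binding expression, and its value is a six-field NCRONTAB expression such as 0 */30 * * * * (every 30 minutes).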

- Create a `GraphHelper` class that contains methods for fetching SharePoint list items and updating the search index using `GraphServiceClient`:
  - The `AddOrUpdateItem` method adds or updates an item in the search index.
  - The `GetIncrementalListData` method fetches the items updated since a particular time (the last trigger time of the function) and is used for incremental crawling.
  - The `GetFullListData` method fetches all the items in the SharePoint list and is used for a full crawl.
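The requests behind these three methods can be sketched without the SDK. This assumes the current v1.0 endpoints (the beta SDK used here may have targeted different paths at the time): list items come from /sites/{siteId}/lists/{listId}/items?$expand=fields, the incremental variant adds a $filter on the Modified column, and each item is written with PUT /external/connections/{connectionId}/items/{itemId}. Filtering on a non-indexed column generally needs the Prefer: HonorNonIndexedQueriesWarningMayFailRandomly header (or an index on the column). The everyone ACL, the property names and the GraphRequests name are illustrative assumptions.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Net.Http;
using System.Text;
using System.Text.Json;

public static class GraphRequests
{
    private const string GraphBase = "https://graph.microsoft.com/v1.0";

    // Full read when modifiedSince is null (like GetFullListData); otherwise only items
    // whose Modified column is at or after that instant (like GetIncrementalListData).
    public static HttpRequestMessage ListItems(string siteId, string listId, DateTimeOffset? modifiedSince)
    {
        string url = $"{GraphBase}/sites/{siteId}/lists/{listId}/items?$expand=fields";
        if (modifiedSince == null)
            return new HttpRequestMessage(HttpMethod.Get, url);

        string since = modifiedSince.Value.UtcDateTime.ToString(
            "yyyy-MM-dd'T'HH:mm:ss'Z'", CultureInfo.InvariantCulture);
        url += "&$filter=" + Uri.EscapeDataString($"fields/Modified ge '{since}'");

        var request = new HttpRequestMessage(HttpMethod.Get, url);
        // Asks SharePoint to allow filtering on a column that is not indexed.
        request.Headers.Add("Prefer", "HonorNonIndexedQueriesWarningMayFailRandomly");
        return request;
    }

    // Like AddOrUpdateItem: PUT creates the external item or replaces an existing one.
    public static HttpRequestMessage PutExternalItem(
        string connectionId, string itemId, string tenantId,
        IDictionary<string, object> properties, string contentText)
    {
        var body = new Dictionary<string, object>
        {
            // "everyone" grants access to all users in the tenant; its value is the tenant ID.
            ["acl"] = new[]
            {
                new Dictionary<string, object>
                {
                    ["type"] = "everyone",
                    ["value"] = tenantId,
                    ["accessType"] = "grant",
                },
            },
            ["properties"] = properties,
            ["content"] = new Dictionary<string, object> { ["value"] = contentText, ["type"] = "text" },
        };

        string url = $"{GraphBase}/external/connections/{connectionId}/items/{Uri.EscapeDataString(itemId)}";
        return new HttpRequestMessage(HttpMethod.Put, url)
        {
            Content = new StringContent(JsonSerializer.Serialize(body), Encoding.UTF8, "application/json"),
        };
    }
}
```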


- Update the function class so that it calls these methods to get the SharePoint list data and update the search index.

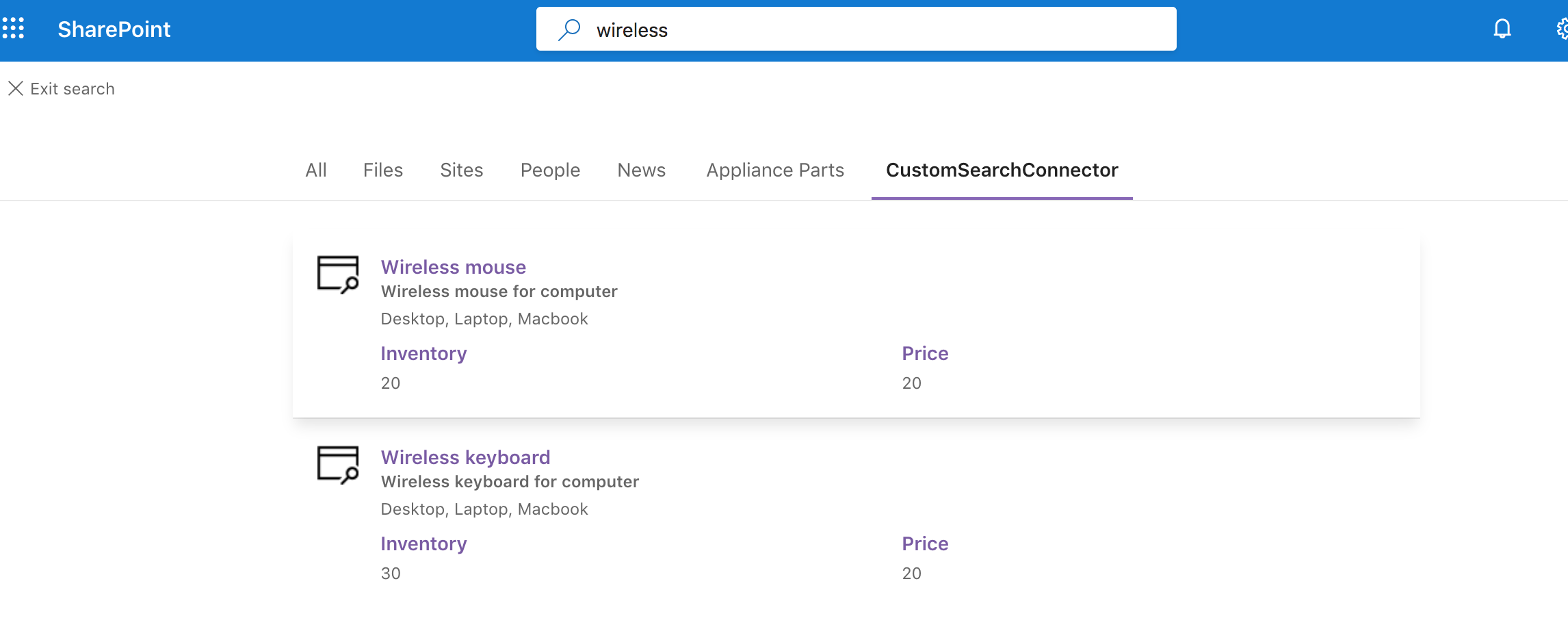
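Whichever crawl type runs, the function has to page through the list items and push each one to the index. A sketch of that loop, assuming Graph's standard paging (each response carries a value array, plus an @odata.nextLink while more pages remain); fetchPage and indexItem are hypothetical hooks that would wrap the authenticated GET of the list items and the PUT of the external item.

```csharp
using System;
using System.Text.Json;
using System.Threading.Tasks;

public static class CrawlRunner
{
    // Walks every page of a Graph collection response and hands each item to indexItem.
    // Returns the number of items processed.
    public static async Task<int> CrawlAsync(
        string firstPageUrl,
        Func<string, Task<string>> fetchPage,   // URL -> JSON page (hypothetical hook)
        Func<JsonElement, Task> indexItem)      // pushes one item to the index (hypothetical hook)
    {
        int indexed = 0;
        string url = firstPageUrl;
        while (url != null)
        {
            string json = await fetchPage(url);
            using (JsonDocument page = JsonDocument.Parse(json))
            {
                foreach (JsonElement item in page.RootElement.GetProperty("value").EnumerateArray())
                {
                    await indexItem(item);
                    indexed++;
                }

                // Graph includes @odata.nextLink only while more pages remain.
                url = page.RootElement.TryGetProperty("@odata.nextLink", out JsonElement next)
                    ? next.GetString()
                    : null;
            }
        }
        return indexed;
    }
}
```

Each JsonElement is only valid until indexItem returns, because the page document is disposed afterwards; call Clone() on it if it has to be kept.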

- Add or update an item in the SharePoint list, and check whether the search results return the updated item after the function has been triggered.
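To check the results from code instead of the Microsoft Search page, a query can be sent to the Microsoft Search API (POST https://graph.microsoft.com/v1.0/search/query). A sketch of the request body, restricted to this connection through contentSources; the query string and field names are placeholders. Because search results are trimmed to what the caller may see, this API is built around delegated (signed-in user) access, so the crawler's app-only token may not work for this call.

```csharp
using System.Collections.Generic;
using System.Text.Json;

public static class SearchCheck
{
    // Body for POST /search/query that only returns items from one connection.
    public static string BuildQueryBody(string connectionId, string queryString)
    {
        var request = new Dictionary<string, object>
        {
            ["entityTypes"] = new[] { "externalItem" },
            ["contentSources"] = new[] { $"/external/connections/{connectionId}" },
            ["query"] = new Dictionary<string, object> { ["queryString"] = queryString },
            // Illustrative property names; they must exist and be retrievable in the schema.
            ["fields"] = new[] { "title", "description", "price" },
        };

        return JsonSerializer.Serialize(new Dictionary<string, object>
        {
            ["requests"] = new[] { request },
        });
    }
}
```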

The entire source code for this sample is available at this link.

Conclusion

In this way, Azure functions can be used for scheduled crawling of external data in Microsoft Search, so that the latest data is available in search results. The sample above can be extended to handle data from other sources, as well as deletions of source items.
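For deletions, note that an incremental crawl cannot notice them, since a deleted list item simply stops appearing in the results. One possible approach, not implemented in the sample, is a mark-and-sweep step during a full crawl: keep the IDs pushed in the previous full crawl (where to store them, for example in the function's storage account, is left open), compare them with the IDs seen now, and delete the difference with DELETE /external/connections/{connectionId}/items/{itemId} (v1.0 path). DeletionSweep is a hypothetical helper.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Net.Http;

public static class DeletionSweep
{
    // IDs that were indexed before but are missing from the current full crawl.
    public static List<string> ItemsToDelete(
        IEnumerable<string> previouslyIndexed, IEnumerable<string> currentlyInSource)
    {
        var current = new HashSet<string>(currentlyInSource, StringComparer.Ordinal);
        return previouslyIndexed
            .Where(id => !current.Contains(id))
            .Distinct(StringComparer.Ordinal)
            .ToList();
    }

    // One DELETE request per stale external item (built, not sent).
    public static IEnumerable<HttpRequestMessage> BuildDeleteRequests(
        string connectionId, IEnumerable<string> itemIds) =>
        itemIds.Select(id => new HttpRequestMessage(
            HttpMethod.Delete,
            $"https://graph.microsoft.com/v1.0/external/connections/{connectionId}/items/{Uri.EscapeDataString(id)}"));
}
```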